
Creative professionals are increasingly being asked to learn how to prompt systems using text, questions, or even code to coax artificial intelligence into conjuring ideas. With traditional tools sidelined, digital or physical, the process can sometimes feel like navigating a room you’re familiar with, but with the lights flickering or even completely turned off. But what if you could interact with a generative AI system on a much more human level, one that reintroduces the tactile into the process? Zhaodi Feng’s Promptac combines the “prompt” with the “tactile” in name and in practice, with an intriguing interface that uses human sensations that don’t feel quite so…artificial.

These objects may look like unusual pieces of pastel-hued pasta, but attached to an RFID sensor, each one operates as a tactile interface component, allowing users to manipulate generated digital objects by sampling colors or textures and by applying pressure and movement.

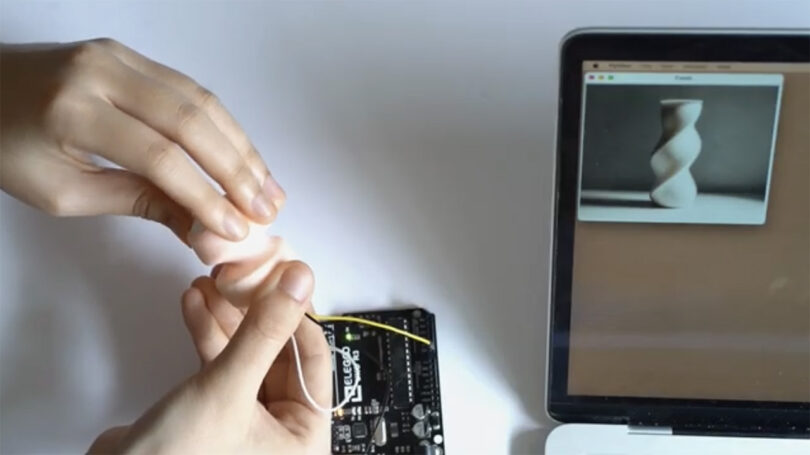

Designed for the Royal College of Art Graduate Show in London, Feng’s device, with its exposed wires, may give off a science experiment vibe. But when you watch the Arduino-powered concept in use, the system’s potential seems immediately applicable. Promptac illustrates how designers across a multitude of disciplines may one day be able to dynamically apply colors, textures, and materials to digital objects created by generative AI, with a degree of physicality that is currently absent.

During the tech demo, Feng uses the Promptac to physically manipulate a digital vase, twisting the object into a spiral using a tactile, rubbery knob interface.

The design process with Promptac begins much like that of any other natural language AI prompting system, starting with a carefully worded request to produce a desired object to use as a canvas/model. From there, the generated image’s color, shape, size, and perceived texture can be manipulated using a variety of oddly shaped “hand manipulation sensors.”

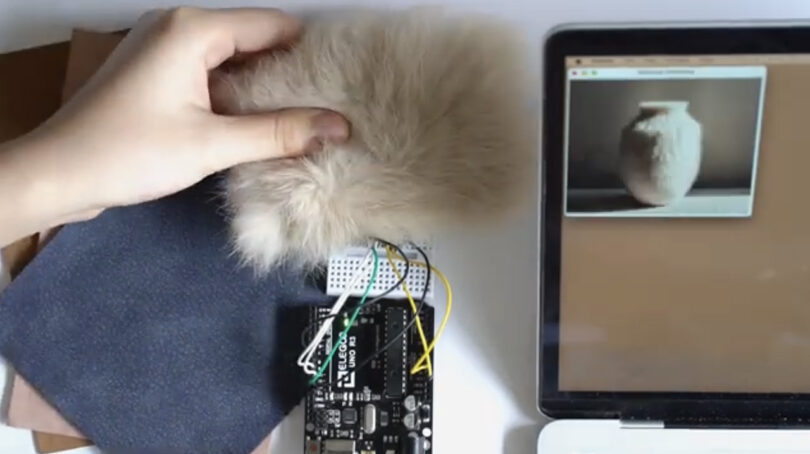

Textures and colors can be altered by sampling real-world swatches of color or even physical materials. Feng is shown applying a shag surface across a vase by sampling a swatch of the furry material.

Feng believes that by reducing reliance on text prompts, Promptac can also help people with disabilities interact with generative AI tools.

The potential becomes even more evident when watching the Promptac in action.

To see more of Zhaodi Feng’s work for the Royal College of Art Graduate Show, visit her student project submissions here.
